Oscar Dominguez

Global Principal Geoscientist QAQC, Technical Centre of Excellence, BHP, Perth, Australia.

DOI: https://doi.org/10.1255/sew.2021.a5

© 2021 The Author

Published under a Creative Commons BY-NC-ND licence

Business decisions in society and across a wide swath of industry sectors are often data-driven, making sampling reliability and analytical data quality of paramount importance. Quality Management (QM) plays a vital role in the Quality Assurance/Quality Control process. Oscar Dominguez here presents the critical role of QM in the mining sector, where everything is BIG: tonnages, challenges, environmental impact, profits and risks. He illustrates how proper sampling is a critical success factor here too. But the mining sector view is not unique; the QM prerogatives can be carried over to many other sectors as well.

Mining: where everything is BIG

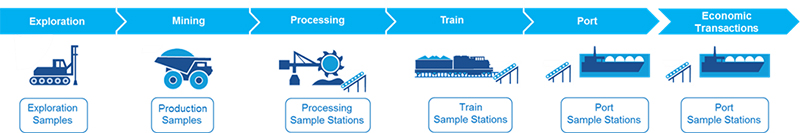

In the mining sector, decisions and investments in exploration, infrastructure construction, mining operations, ore processing and transportation require multi-million dollar capital and operating budgets, but critical decisions can be based only on very small samples (of the order of a few grams) that are supposed to represent thousands of tons. It is clear that the compound, complex lot-to-analysis pathway must be representative in all stages, Figure 1. The Theory of Sampling (TOS) is a self-evident element in the full Quality Management (QM) scope.

Figure 1. The mining value chain. Rectangles indicate where samples (and their analytical results) are used to support critical business decisions.

This was one of the most influential observations that led Pierre Gy to develop the TOS. It later led researchers such as Dominique François-Bongarçon and Francis Pitard, among others, to quantify and demonstrate to executives and mining professionals the severe risks to which businesses expose themselves when they compromise sample quality in a misguided attempt to reduce costs. Over many decades, examples of this practice have been accumulating, but not many have been published (for obvious reasons). It will suffice to refer to two major communications from the sampling world.[1,2]

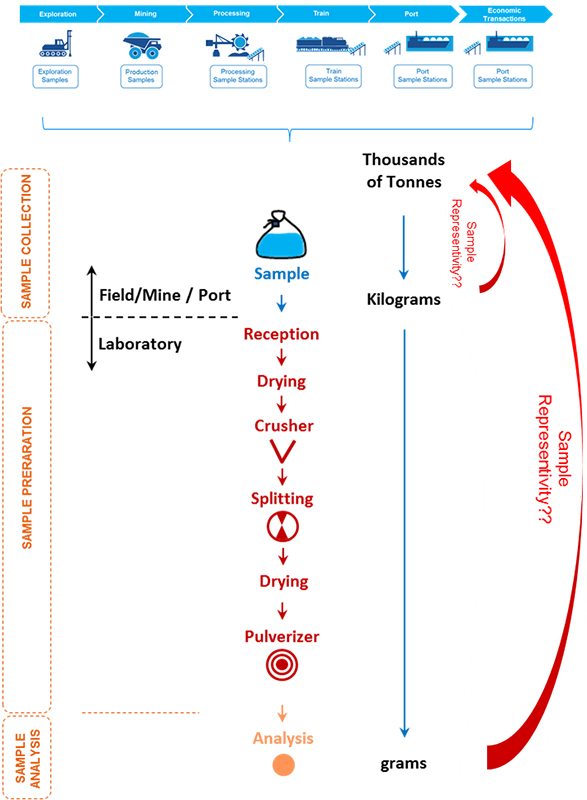

In this context, supervisory programmes have been developed to establish Quality Assurance/Quality Control (QA/QC) parameters that monitor correct execution of sampling protocols and control each stage of the “sampling cycle” [sample collection, preparation and analysis (method)] to preserve, quantify and ensure sample representativity, Figure 2.

Figure 2. Schematic diagram showing a generalised process and appropriate quality requirements for samples collected across the full mining value chain. The main goal of a quality programme is highlighted: to preserve, quantify and ensure sample representativity.

QA/QC reports commonly include statistical–numerical results that quantify the performance of QA/QC controls (field duplicates, preparation duplicates, blanks, standards etc.). Graphics such as scatter plots, QQ plots, histograms and cumulative frequencies are used to represent the results graphically. Statistical values normally include, for example, relative differences, absolute differences, relative variance, averages, t-tests and Z-scores, which are used to quantitatively express the relationship between duplicate pairs. However, is an effective quality programme simply a statistical exercise? And can pairwise comparisons detect all possible problems (in particular, a sampling bias)?

The following discussion considers these questions in the context of a quality programme standard as outlined by the JORC code. It is intended as a call to return to basics during this era of new technological applications and advanced statistical analysis.

The case for proactivity

This paper aims to highlight the concept of “QM” as the precursor for appropriate corrective actions to close gaps determined by the execution of a quality programme, specifically trend analysis (over ranges of time and/or grade), with the aim of proactively detecting deviations in control performance and thus proactively rectifying their source.

Sometimes, there is confusion among those accountable for QA, and even among auditors, that if individual data points fall within predetermined acceptance limits, they are necessarily acceptable and, therefore, suitable as a basis for operational and investment decisions. A similar misconception is that tabular statistical summaries are enough to demonstrate acceptability of QC outcomes. However, the QM perspective is that results within the acceptance limits can still be de facto internally biased, or show material deviations over a period of time, thereby still impacting operational performance. An unstable process which happens to plot within acceptance limits for some restricted time interval is nevertheless an unstable process at large. Thus, true process control requires something more.

QM refers to reliable proactive detection of such “anomalous tendencies”; that is, the trend over time/grade of a given statistic. QM specifically also includes the process by which these trends are understood, communicated and rectified. Some businesses refer to this process as “continuous improvement” or as the “Plan–Do–Check–Act” cycle. In the mining industry, this proactive approach can have significant impact on financial outcomes through sequence optimisation, contract negotiation, and management of plant and processing infrastructure.

Examples of QM in the mining value chain

Below are presented examples of how QM can be implemented throughout the mining value chain, using a proactive approach as guided by JORC Table 1 and how results are typically presented in QA/QC reports or audits.

Sampling (“sample collection”)

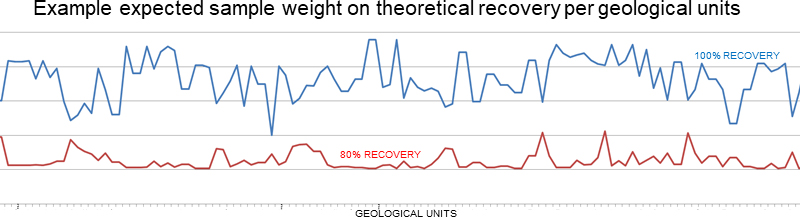

JORC Table 1 provides guidance that drilling campaigns shall deploy measures to maximise sample recovery and representativity. A typical example for a reverse circulation (RC) drilling campaign would be to compare actual sample weights to a theoretical “ideal” drilling recovery, calculated as a function of material density, rod length and diameter, and aperture size of the sample chute (Figure 3).

Figure 3. Comparing actual sample weights to a theoretical “ideal” drilling recovery.
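The comparison in Figure 3 can be sketched numerically. The following is a minimal illustration only, assuming a cylindrical drilled interval and a splitter that delivers a fixed fraction of the cuttings to the sample bag; the function names, the `split_fraction` parameter and all input values are hypothetical and are not taken from the column.

```python
import math


def theoretical_sample_mass_kg(hole_diameter_m, interval_length_m,
                               bulk_density_t_per_m3, split_fraction):
    """Mass expected in the sample bag at 100 % recovery.

    Assumes a cylindrical hole and a splitter aperture that passes a
    constant fraction of the cuttings (both are simplifying assumptions).
    """
    hole_volume_m3 = math.pi * (hole_diameter_m / 2.0) ** 2 * interval_length_m
    total_mass_kg = hole_volume_m3 * bulk_density_t_per_m3 * 1000.0
    return total_mass_kg * split_fraction


def recovery_percent(actual_mass_kg, theoretical_mass_kg):
    """Actual sample mass as a percentage of the theoretical mass."""
    return 100.0 * actual_mass_kg / theoretical_mass_kg


# Hypothetical inputs: 140 mm hole, 1 m interval, 2.5 t/m3, 10 % split.
expected_kg = theoretical_sample_mass_kg(0.140, 1.0, 2.5, 0.10)
recovery = recovery_percent(3.2, expected_kg)
print(f"expected {expected_kg:.2f} kg, recovery {recovery:.1f} %")
```

In practice the density would vary by material type, so the theoretical mass is best treated as a reference line rather than an exact target.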

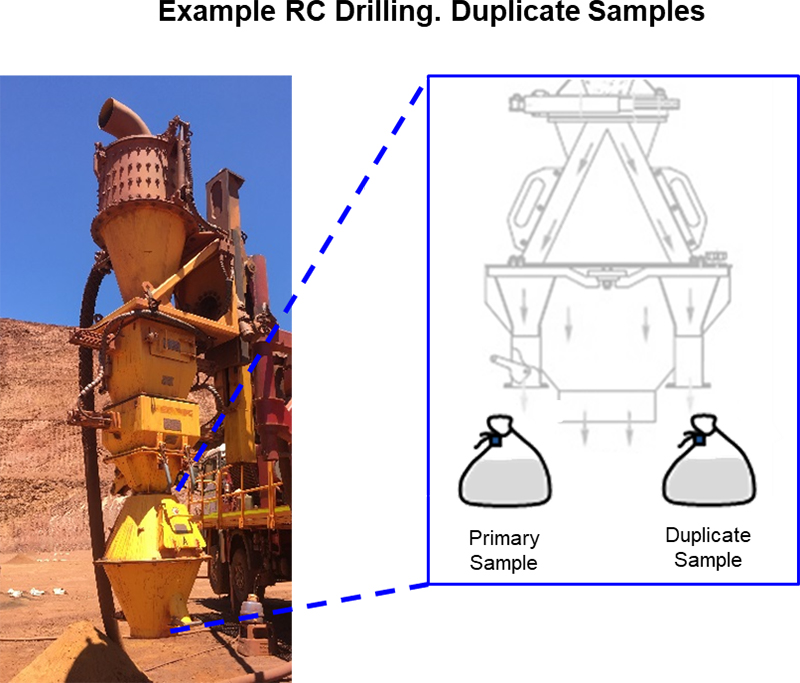

Where duplicate samples are collected, it is expected that they will have similar, if not identical, sample weights. This is considered a satisfactory indication that the rig set-up, sampling devices and drilling/sample collection process are operating according to design, Figure 4.

Figure 4. Duplicate field sample production directly at the RC drilling site is considered a satisfactory sampling quality assurance if the weights are closely similar.

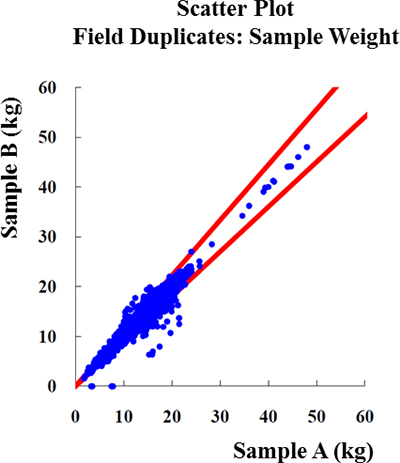

Results are commonly presented as in Figure 5, in which a scatter plot shows the distribution of the results between duplicates. In this example, the scatter plot shows differences in weight outside expected thresholds, between 10 kg and 30 kg, and potentially a small bias towards sample A being heavier than sample B.

Figure 5. RC field duplicates performance: scatter plot comparison of duplicate sample weight.

However, there are several questions this graph fails to answer: why are A samples systematically larger than B samples? Is this the consequence of a particular drill rig? Or of a particular sampling device? When was the bias first introduced? Is this bias random, or sustained for a period of time? What was done to fix it?

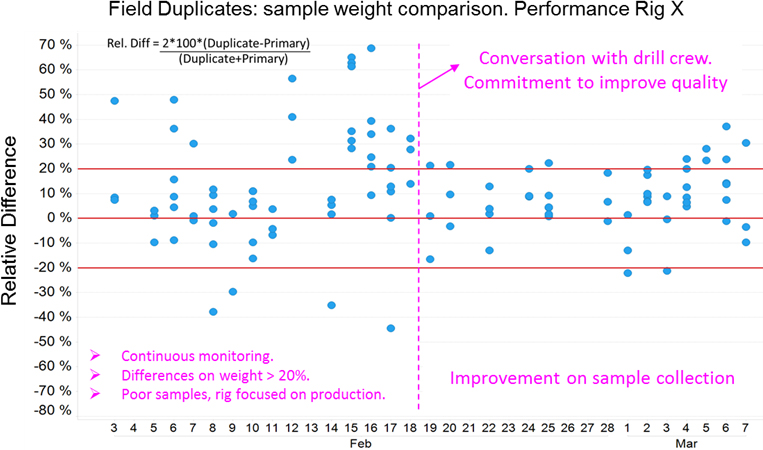

Figure 6 presents an example of how QM practices can proactively improve sample collection by monitoring rig performance in a different way, while still comparing the weight of duplicate samples.

Figure 6. Example of monitoring sample weight on duplicate samples. Quality Assurance (QA): collect sample weight on duplicate samples. Quality Control (QC): sample weight within ± 20 % relative difference. Quality Management (QM): continuous monitoring of the information and actions where results are outside expected thresholds.

Figure 6 can be interpreted as follows: during the first two weeks of drilling in February, weight differences for rig 1 were outside the accepted thresholds (Relative Difference ± 20 %). A conversation with the drill crew and drilling company supervisor is then held in the field to explain to the driller the importance of drilling on geological models, to understand the sources of this poor performance, to develop an action plan to improve the sampling practice and to obtain a commitment to increase sample quality.

Through QM, corrective actions are taken by continuously monitoring results over time. This proactive approach can save thousands of dollars by “doing things right the first time” rather than reviewing QA/QC performance en masse once the drilling campaign is already finished, by which time it is too late, by far!
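The kind of monitoring shown in Figure 6 can be sketched as follows. This is only an illustration: the ± 20 % limit comes from the Figure 6 caption, but the definition of relative difference used here (difference divided by the pair mean), the grouping by ISO week and all records are assumptions made for the example.

```python
from collections import defaultdict
from datetime import date

QC_LIMIT_PCT = 20.0  # QC threshold quoted in the Figure 6 caption


def relative_difference_pct(weight_a, weight_b):
    """Signed difference relative to the pair mean (assumed definition)."""
    pair_mean = (weight_a + weight_b) / 2.0
    return 100.0 * (weight_a - weight_b) / pair_mean


def weekly_failure_rates(records):
    """Fraction of duplicate pairs outside the QC limit, per rig and ISO week.

    records: iterable of (drill_date, rig, weight_a_kg, weight_b_kg).
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [failures, total]
    for drill_date, rig, weight_a, weight_b in records:
        iso = drill_date.isocalendar()
        key = (rig, iso[0], iso[1])  # (rig, ISO year, ISO week)
        counts[key][1] += 1
        if abs(relative_difference_pct(weight_a, weight_b)) > QC_LIMIT_PCT:
            counts[key][0] += 1
    return {key: fails / total for key, (fails, total) in sorted(counts.items())}


# Hypothetical duplicate weights (kg) for two rigs.
records = [
    (date(2021, 2, 1), "rig 1", 30.0, 20.0),
    (date(2021, 2, 3), "rig 1", 28.0, 21.0),
    (date(2021, 2, 2), "rig 2", 25.0, 24.0),
    (date(2021, 2, 16), "rig 1", 25.0, 23.0),
]
rates = weekly_failure_rates(records)
for (rig, year, week), rate in rates.items():
    print(f"{rig} {year}-W{week:02d}: {rate:.0%} of pairs outside limit")
```

Reviewing such a table week by week, rather than at the end of the campaign, is what allows the conversation with the drill crew to happen while drilling is still under way.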

Sample preparation

Following the same criteria as for Sampling above, the JORC Table 1 benchmark requires evidence that “quality control procedures [are] adopted for all sub-sampling stages to maximise representativity of samples”.

Usually, blanks, duplicate samples and sizing tests are used as a QA tool to monitor the performance of crushers and mills. Later, results are included in QA/QC reports where the performance of crushers and mills is summarised, for example as shown in Figure 7.

Figure 7. Examples of how duplicate sample performance is presented in QA/QC reports. (A) and (B) show different types of graph used to visualise the correlation of the samples (A) and the % of data (B) falling within a certain % of difference, expressed as AMPD.
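The quantities behind a summary such as Figure 7B can be sketched as below. The column does not define AMPD, so the formula used here (absolute pair difference as a percentage of the pair mean) is an assumption, as are the function names, the 10 % threshold and the assay values.

```python
def ampd_pct(original, duplicate):
    """Absolute pair difference as a percentage of the pair mean.

    This definition of AMPD is an assumption made for illustration.
    """
    pair_mean = (original + duplicate) / 2.0
    return 100.0 * abs(original - duplicate) / pair_mean


def fraction_within(pairs, threshold_pct):
    """Fraction of duplicate pairs whose AMPD is at or below the threshold."""
    values = [ampd_pct(original, duplicate) for original, duplicate in pairs]
    return sum(value <= threshold_pct for value in values) / len(values)


# Hypothetical (original, duplicate) assay pairs.
pairs = [(1.00, 1.05), (2.00, 1.90), (0.50, 0.65), (3.00, 3.02)]
share = fraction_within(pairs, 10.0)
print(f"{share:.0%} of pairs within 10 % AMPD")
```

A single summary figure of this kind says nothing about when, or at which grades, the failing pairs occurred.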

While these graphs and summary tables are typical in a great many mining practices today, this information does not allow the application of QM to monitor the information in real time and proactively improve the results. How can an improved practice be designed and implemented? Again, time/grade-related trends are key!

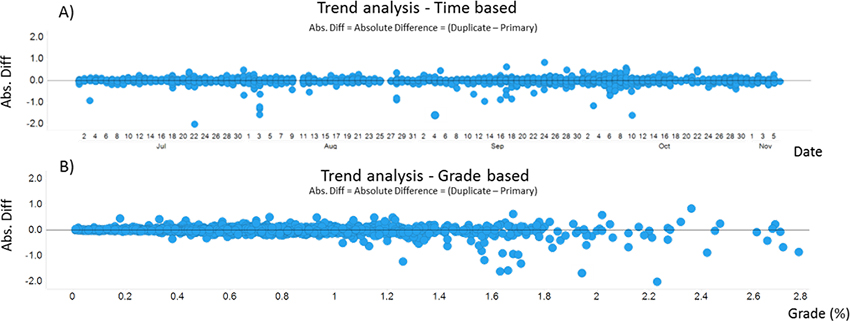

Figure 8 shows an example where a trend analysis is performed both on a time (date) and on a grade basis: A) The Absolute Difference of Duplicate samples is plotted against the date the laboratory has reported the results. The graph does not show major issues over a specific period of time, but if the data are assessed on a grade basis as shown in B), a trend can in fact be observed and interpreted as the grade of the primary sample being greater than that of the duplicate sample. The action here will be to talk to the drilling company (if these are field duplicates), to the team performing the core cutting, or to the laboratory (if the data are crusher or pulp duplicates), in order to find the source of this bias and develop an action plan to fix it and close the gap. This real-time assessment and management is the basis for the desired proactive approach. It needs to be emphasised as a supplement to reactive activities such as reconciliation results or monthly/quarterly QA/QC reports (if done), where the opportunity to fix issues in near-real time is lost.

Figure 8. Examples of trend analysis performed on a time and grade basis for duplicate samples (applicable for field, crusher and pulp duplicates). These graphs highlight the value of performing QM both on a date and grade basis: the analysis by time (date) does not reveal any major issue in terms of bias and the results look consistent. However, trend analysis performed on a grade basis highlights a bias at high grades that needs to be reviewed, understood and fixed.
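A grade-based trend check of the kind described for Figure 8B can be sketched as follows. This is a minimal illustration, assuming pairs are binned on their mean grade and the signed difference (primary minus duplicate) is averaged per bin; the bin edges and assay values are hypothetical.

```python
from statistics import mean


def mean_signed_difference_by_bin(pairs, bin_edges):
    """Average (primary - duplicate) per grade bin.

    pairs: iterable of (primary_grade, duplicate_grade).
    bin_edges: ascending edges; each bin is [low, high). Pairs whose
    mean grade falls outside every bin are ignored.
    """
    bins = {(low, high): [] for low, high in zip(bin_edges[:-1], bin_edges[1:])}
    for primary, duplicate in pairs:
        pair_mean = (primary + duplicate) / 2.0
        for (low, high), differences in bins.items():
            if low <= pair_mean < high:
                differences.append(primary - duplicate)
                break
    return {edges: mean(diffs) for edges, diffs in bins.items() if diffs}


# Hypothetical duplicate grades: unbiased at low grade, biased at high grade.
pairs = [
    (0.50, 0.52), (0.61, 0.59), (0.80, 0.81),
    (2.40, 2.10), (3.10, 2.80), (2.90, 2.70),
]
by_grade = mean_signed_difference_by_bin(pairs, [0.0, 1.0, 2.0, 4.0])
for (low, high), bias in by_grade.items():
    print(f"grade {low}-{high}: mean difference {bias:+.3f}")
```

The same function can be applied with dates converted to numbers (for example, day of campaign) in place of grades, which gives the time-based view of Figure 8A.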

Chemical determination

Certified Reference Materials (CRMs) are extensively used to monitor laboratory performance, and mining companies are obliged to arrange preparation of their own internal Working Reference Materials to perform QM. It is not recommended to rely on internal laboratory QA/QC processes only. Changes in the lab results or consistent biases across time are best detected by an external team accountable for QM, in order to highlight issues within the laboratory, to identify sources of deviations and their production consequences, and to generate an action plan and apply lessons learned to avoid repetitive issues.

Often statistical analyses consider “average values”, which sometimes lead to inaccurate conclusions that assume a process is well controlled “on average”, or “fit for purpose”. QM applies a different approach, assessing data in real time, thereby escaping the use of time-averages, and keeping an appropriate business focus with the aim to ensure consistent and defensible results, supporting sustainable business decisions.

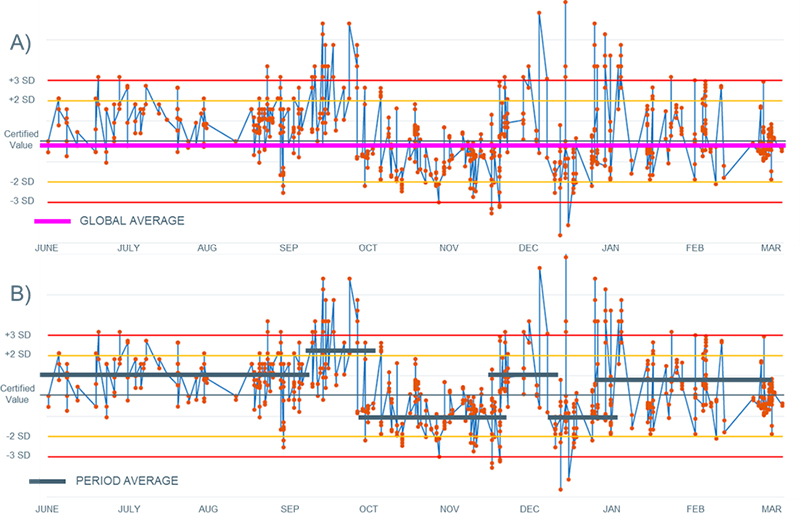

Figure 9 demonstrates the differences between an approach reliant on averages vs QM applied to CRM results (QA = CRMs, QC = ±3 SD and QM = trend analysis). Figure 9A shows 10 months of performance of a CRM. Because results have been performing mostly within three standard deviations, the business might infer the process is well controlled and would feel confident, given the global average is close to the certified value.

Figure 9. CRM performance showing results performing mostly within three expected standard deviations. A) Global average is very close to the certified value, which can be interpreted as meaning that the results are valid. B) Period average has been included, showing the significant time-variability of the laboratory performance during individual months.

However, Figure 9B shows the internal variability of the laboratory (period average) over time. This lack of consistency gives rise to operational instability, exposing the business to risks of under- or over-performing in production, processing and compliance-to-plan results, or leading to variable products.

These are examples of cases where QM becomes important by monitoring information in real time and detecting changes in the performance of the laboratory proactively, thereby ensuring consistency and sustainability of business results.
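The contrast between Figures 9A and 9B can be sketched with a few lines of code. This is an illustration only: the ±3 SD limit follows the text, but the function name, the monthly grouping labels and all CRM values are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def crm_summary(results, certified_value, certified_sd):
    """Global and per-period bias of CRM assays against the certified value.

    results: list of (period_label, assay_value).
    """
    values = [value for _, value in results]
    outside = [v for v in values if abs(v - certified_value) > 3 * certified_sd]
    by_period = defaultdict(list)
    for period, value in results:
        by_period[period].append(value)
    return {
        "global_bias": mean(values) - certified_value,
        "n_outside_3sd": len(outside),
        "period_bias": {p: mean(vs) - certified_value for p, vs in by_period.items()},
    }


# Hypothetical CRM assays: certified value 60.0, standard deviation 0.2.
results = [
    ("2021-01", 60.3), ("2021-01", 60.4), ("2021-01", 60.2),
    ("2021-02", 59.7), ("2021-02", 59.6), ("2021-02", 59.8),
    ("2021-03", 60.0), ("2021-03", 60.1), ("2021-03", 59.9),
]
summary = crm_summary(results, certified_value=60.0, certified_sd=0.2)
print(f"global bias {summary['global_bias']:+.2f}, "
      f"outside 3 SD: {summary['n_outside_3sd']}")
for period, bias in summary["period_bias"].items():
    print(f"{period}: period bias {bias:+.2f}")
```

With these made-up values every result sits inside ±3 SD and the global bias is essentially zero, yet the first two periods are offset in opposite directions, which is the pattern the period averages are meant to expose.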

Conclusions

This column has highlighted that a quality programme is not just a statistical exercise in which global averages or standard deviations assure sustainable and consistent QA/QC results. The examples provided demonstrate the value of QM as a complement to routine QA/QC processes and statistical analysis. A proactive QM approach, in which data are monitored to ensure consistent results across time and over a range of grades, reduces resource and operational risks and allows business decisions to be based on representative, quality-quantified information across the entire value chain.

Indirectly this paper also highlights the value and necessity of having a central (external) QM team which is accountable for governance and for performing appropriate quality-related activities (QA/QC and QM) across both exploration and production.

Finally, QM is currently in vogue and companies have been pushing to be part of “a new era” of new technological applications (sensors) and data analysis (machine learning, conditional simulations etc.), which aims to provide businesses with real-time data for real-time business decisions. This column highlights that both new technology and advanced statistical techniques need to be based on appropriately defined “good quality data”, not just a lot of data. Appropriately good data also need to be incorporated into simulations and advanced statistical tools. QM becomes a critical success factor to ensure that the performance of future technologies is robust; otherwise the old adage still rules: Garbage In–Garbage Out (GIGO).

References

1. P. Carrasco, P. Carrasco and E. Jara, “The economic impact of correct sampling and analysis practices in the copper mining industry”, in “Special Issue: 50 years of Pierre Gy’s Theory of Sampling. Proceedings: First World Conference on Sampling and Blending (WCSB1)”, Ed by K.H. Esbensen and P. Minkkinen, Chemometr. Intell. Lab. Sys. 74(1), 209–213 (2004). https://doi.org/10.1016/j.chemolab.2004.04.013

2. P. Minkkinen and K.H. Esbensen, “Following TOS will save you a lot of money (pun intended)”, Spectroscopy Europe 30(2), 16–20 (2018). https://www.spectroscopyeurope.com/sampling/following-tos-will-save-you-lot-money-pun-intended

Oscar Dominguez

Geologist, Master of Business Administration (MBA), and Geo-Mining-Metallurgical Diploma, with >20 years of international experience in the mining industry across multiple commodities. Council member of the International Pierre Gy Sampling Association (IPGSA). 16 years working at BHP, currently as Global Principal Geoscientist QAQC at the Technical Centre of Excellence, providing support on Sampling & QAQC to all the assets and commodities of BHP.