Antony N. Daviesᵃ,ᵇ and Christoph Thomasᶜ

ᵃExpert Capability Group – Measurement and Analytical Science, Nouryon Chemicals BV, Deventer, the Netherlands

ᵇSERC, Sustainable Environment Research Centre, Faculty of Computing, Engineering and Science, University of South Wales, UK

ᶜLC/MS Senior Application Specialist, Waters Corporation, Helfmann-Park 10, 65760 Eschborn, Germany

Spectroscopists are under increasing pressure to deliver results at ever lower limits of detection, often in a widening range of "impure" sample types. The recent 6th Extractables and Leachables forum in Hamburg¹ was therefore a great opportunity to hear about developments and trends in the regulatory environment. Delegates could also swap experiences with spectroscopists from many different business backgrounds. This column focuses on three things that help us cope with the ever-growing number of peaks emerging from ever lower levels of background noise: the intelligent use of analytical data, in silico chemical structure processing and instrument hardware development. It is also a pleasure to write this column with an ex-colleague from both ISAS-Dortmund and Waters, especially as Christoph has moved over to organic analysis from the dark side of elemental analysis!

Detection limits? Down down, deeper and down!²

Early in the workshop we learned that the first US court cases have appeared that rely on data for analytes at ppq (parts-per-quadrillion) levels in complex matrices. This dramatic scene-setting certainly focused the participants' minds on everything that followed. Participants came from a broad range of manufacturing industries, contract research organisations and analytical laboratories, so the discussions about best practices and changes in the regulatory environment were interesting to follow. The application areas were divided between pharma and non-pharma. Excellent talks on specific companies' business issues and deployed solutions sat alongside vendor presentations on hardware and software improvements.

New regulations coming into force are also targeting so-called second-level suppliers further up the materials supply chain. As one speaker highlighted, buying a chemical with the same CAS number from a different supplier says nothing about whether its low-level impurity profile is equivalent, and that profile is what matters under food-contact and medical device regulations. Second-level raw material suppliers will therefore need to become familiar with the regulatory environment of their customers and their customers' customers. They must understand their own products in terms of these customers' needs, especially by delivering better testing and certification of very low levels of minor impurities. This can be challenging for such suppliers, because often only a tiny proportion of their total manufacturing output ends up in products covered by medical device or food-contact regulations. As a result, the required level of quality control may not currently exist at their facilities.

We have been blessed by recent instrumentation developments that can deliver quantitative results for very low levels of analytes in quite complex matrices. Accurate mass spectrometric detection can be combined with an additional "clean-up" stage, such as ion mobility spectrometry. Used together, they can reach most of the limits of detection demanded by our regulators, provided we know what we are looking for.

Targeted, non-targeted and something in-between?

As we have discussed before in this column, hardware developments mean we can expect a flood of data. We must turn that data into usable, relevant information without being swamped. That requires fast, intelligent data handling that combines what is "possible" with a strong dose of common sense, so that less likely candidates can be eliminated when identifying trace substances.

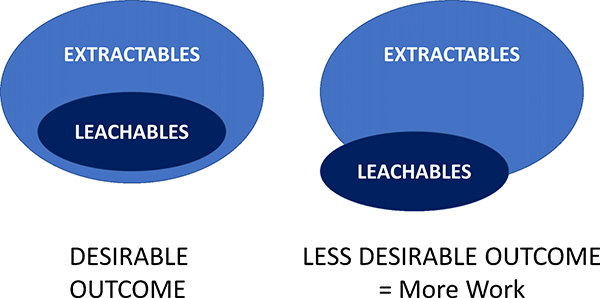

To understand the approaches outlined by different presenters, it is worth briefly looking at how extractables and leachables are defined. The definitions vary between companies and between regulatory bodies, and there is little unified guidance or standardisation for extractables and leachables testing. Our attempt to distil the essential differences follows.

Extractables: Chemical species that are released from materials under accelerated laboratory testing conditions such as exaggerated temperatures, solvent strengths, pH or surface exposure levels.

Leachables: Chemical species that are released from container materials, packaging or medical devices as a result of direct contact with the contained product, foodstuff or drug, or with the human body. They are often called migrants. In many cases the real drug formulation or foodstuff cannot be used for initial testing, so close simulants (placebos) take their place.

These chemical species can be further classified into two groups. IAS (intentionally added substances) are used in manufacturing the product, such as monomers or other formulation components. NIAS (non-intentionally added substances) include starting material impurities, unwanted reaction products formed during manufacturing and even breakdown products formed as a desired material degrades.

As you can well imagine, such extractables and leachables testing often yields a complex mixture of non-volatile, semi-volatile and volatile chemical entities, as well as extractable metals (think of pigment inks used in packaging applications).

Extractables testing is usually carried out first. It builds a long list of potential target chemicals that may later be observed in longer-term leachables studies under more realistic conditions of use. The chemicals observed in a leachables study are often a small subset of those found in the more aggressive extractables work (Figure 1).

Figure 1. Chemical species seen in leachables studies have often already been identified in earlier extractables work. Any that have not will be new chemical entities, which must be isolated and structurally characterised by, for example, nuclear magnetic resonance spectroscopy.
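At its core, the comparison in Figure 1 is a set operation. The minimal Python sketch below uses illustrative compound lists (the names are placeholders, not results from any study) to split a leachables list into two groups. One group holds species already known from extractables work. The other holds new entities that would need isolation and structural characterisation.

```python
# Illustrative compound lists only; in practice these would be the
# identified species from an extractables study and a leachables study.
extractables = {"BHT", "erucamide", "caprolactam", "DEHP", "Irganox 1076"}
leachables = {"BHT", "erucamide", "unknown_mz_301.1410"}

def compare_studies(extractables: set[str], leachables: set[str]) -> tuple[set[str], set[str]]:
    """Split leachables into species already seen as extractables and new entities."""
    already_known = leachables & extractables  # intersection: expected leachables
    new_entities = leachables - extractables   # difference: need characterisation
    return already_known, new_entities

known, new = compare_studies(extractables, leachables)
print(sorted(known))  # ['BHT', 'erucamide']
print(sorted(new))    # ['unknown_mz_301.1410']
```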

In most cases, quantitation must be carried out using reference standards at the concentration levels of the extractables. All the sample handling steps before the spectrometer also need careful consideration. Several sources can lead to very low-level contaminants being wrongly identified: impurities in the testing matrices, contamination during extraction and preconcentration, and in some cases contamination from inappropriate containers in the laboratory itself.

Non-targeted screening is an expensive and often inconclusive process. Many compounds are missing from our reference libraries, so full structural elucidation is often required for every peak detected above the noise level. For example, this may be needed to determine whether a peak is an unexpected carcinogen that requires quantitative analysis to confirm it is below regulatory limits.
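What counts as a peak "above the noise level" depends on how the noise is defined. This hypothetical Python sketch estimates noise as the standard deviation of a peak-free baseline segment. It flags peaks that reach a signal-to-noise ratio of at least 3 (a commonly used detection threshold) and have no library assignment. The data and labels are invented. Other S/N conventions, such as peak-to-peak noise, give different numbers.

```python
from statistics import stdev

# Hypothetical peak-free baseline segment (detector counts).
baseline = [98.0, 102.0, 101.0, 97.0, 103.0, 99.0, 100.0, 100.0]

# Hypothetical picked peaks: (label, apex height above baseline, library hit?).
peaks = [
    ("peak_1", 950.0, True),   # already assigned by a library match
    ("peak_2", 5.0, False),    # too close to the noise to report
    ("peak_3", 310.0, False),  # real signal with no library match
]

def peaks_needing_elucidation(peaks, baseline, min_sn=3.0):
    """Return (label, S/N) for unassigned peaks at or above the S/N threshold."""
    noise = stdev(baseline)  # sample standard deviation of the baseline
    return [
        (label, height / noise)
        for label, height, in_library in peaks
        if height / noise >= min_sn and not in_library
    ]

to_elucidate = peaks_needing_elucidation(peaks, baseline)
print(to_elucidate)  # [('peak_3', 155.0)]
```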

Targeted screening is simpler. You begin with a well-defined list of target analytes, each with an available reference material, and you can optimise the data acquisition settings for those analytes. The expected fragmentation patterns, accurate masses and chromatographic retention indices all simplify identification. For more complex samples, the CCS (collisional cross section) from an ion mobility separation stage can lower the background noise and give cleaner spectra. The analyte of interest can then be identified with more confidence. Ion mobility spectrometry can also separate isomers that have different collisional cross sections but cannot be distinguished by their gas or liquid chromatographic retention times and mass fragmentation patterns alone.
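The following minimal Python sketch makes the multi-criteria idea concrete. It accepts a targeted hit only if three criteria all agree with a reference entry: accurate mass (as a ppm error), retention time and CCS. The target values and tolerances (5 ppm, 0.1 min, 2 %) are hypothetical placeholders, not validated acceptance criteria. The example is constructed so that two isomeric targets share the same m/z and nearly the same retention time, and only the CCS tells them apart.

```python
from dataclasses import dataclass

@dataclass
class Reference:
    name: str
    mz: float   # theoretical m/z of the expected ion
    rt: float   # reference retention time, min
    ccs: float  # reference collisional cross section, Å²

@dataclass
class Feature:
    mz: float
    rt: float
    ccs: float

def ppm_error(measured: float, theoretical: float) -> float:
    """Mass error in parts per million."""
    return (measured - theoretical) / theoretical * 1e6

def is_match(f: Feature, r: Reference, ppm_tol: float = 5.0,
             rt_tol: float = 0.1, ccs_tol_pct: float = 2.0) -> bool:
    """All three criteria must agree for an identification."""
    return (abs(ppm_error(f.mz, r.mz)) <= ppm_tol
            and abs(f.rt - r.rt) <= rt_tol
            and abs(f.ccs - r.ccs) / r.ccs * 100 <= ccs_tol_pct)

# Hypothetical isomer pair: identical m/z, nearly identical retention time.
targets = [
    Reference("target_A", mz=300.1000, rt=5.20, ccs=170.0),
    Reference("target_B", mz=300.1000, rt=5.25, ccs=182.0),
]
feature = Feature(mz=300.1009, rt=5.22, ccs=181.0)

hits = [r.name for r in targets if is_match(feature, r)]
print(hits)  # ['target_B']
```

Without the CCS check, both targets would pass: the mass error is about 3 ppm and both retention times lie within the window.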

One advantage of working in this area is that extractables testing makes "suspect screening" against reference libraries of compounds possible. These libraries are often populated with materials used in the product manufacturing processes. They also include compounds seen in previous extractables testing of similar formulations, for which, hopefully, analytical reference compounds are available. Building such reference libraries helps reduce the additional non-targeted testing workload.
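A suspect-screening lookup can be sketched as a tolerance search over a sorted list of library masses. In the hypothetical Python below, the library entries and the 5 ppm window are illustrative only. A real workflow would typically also check isotope patterns, retention and fragments before accepting a suspect.

```python
from bisect import bisect_left, bisect_right

# Hypothetical in-house suspect library: (m/z of expected ion, name), e.g.
# compiled from formulation ingredients and earlier extractables studies.
library = sorted([
    (391.2843, "suspect_4"),
    (205.1587, "suspect_1"),
    (219.1750, "suspect_3"),
    (219.1743, "suspect_2"),
])
masses = [mz for mz, _ in library]  # sorted m/z values for binary search

def suspects_for(measured_mz: float, ppm_tol: float = 5.0) -> list[str]:
    """Return names of library entries within +/- ppm_tol of the measured m/z."""
    delta = measured_mz * ppm_tol / 1e6  # tolerance window in m/z units
    lo = bisect_left(masses, measured_mz - delta)
    hi = bisect_right(masses, measured_mz + delta)
    return [name for _, name in library[lo:hi]]

print(suspects_for(219.1745))  # ['suspect_2', 'suspect_3']
print(suspects_for(300.0000))  # []
```

Two near-isobaric suspects fall inside the same window here, which is why accurate mass alone rarely settles an identification.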

Our state-of-the-art spectrometers can deliver useful data at analyte concentrations we could previously only dream of, but library searching is not really new. So how can we go one step further?

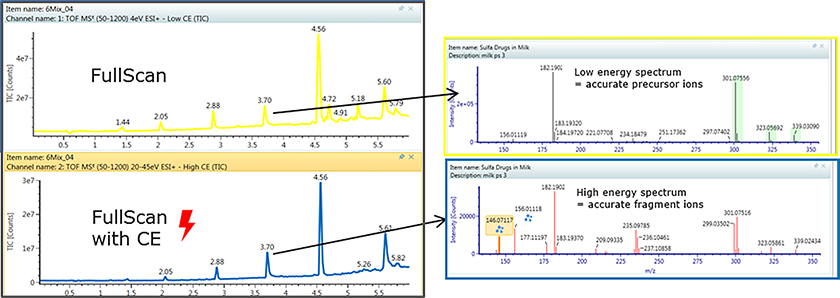

Unifying advanced instrumentation with automated data analysis and in silico prediction

The latest product offering from Waters³ brings all these concepts together in a single customisable workflow package. It runs on high-resolution time-of-flight instruments such as the Xevo G2-XS Q-TOF, or on the Vion IMS-QTof with its additional ion mobility capabilities. The MSᴱ acquisition mode records two sets of spectra: low-energy spectra give accurate precursor masses, and high-energy spectra give fragmentation patterns. True 3D peak recognition helps deliver cleaner spectra with less peak overlap. For unknowns, the accurate mass measurements are used to propose candidate structures. In silico fragmentation patterns are then generated for each candidate and compared with the measured spectra, and the best fit assists identification.
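The scoring used in the Waters software is not described here, so this is only a generic, hypothetical Python sketch of the underlying idea. Each candidate structure is ranked by the fraction of measured high-energy fragment ions explained, within a ppm tolerance, by fragments predicted in silico for that candidate. The fragment masses, candidate names and 10 ppm tolerance are invented. The fragment prediction is assumed to have already happened upstream.

```python
def explained_fraction(measured: list[float], predicted: list[float],
                       ppm_tol: float = 10.0) -> float:
    """Fraction of measured fragment ions matched by at least one predicted fragment."""
    if not measured:
        return 0.0
    hits = sum(
        1 for m in measured
        if any(abs(m - p) / p * 1e6 <= ppm_tol for p in predicted)
    )
    return hits / len(measured)

# Hypothetical high-energy fragment m/z values for one deconvolved component.
measured_fragments = [57.0704, 149.0233, 167.0339, 279.1591]

# Hypothetical candidates (proposed from the low-energy precursor accurate mass),
# each with fragment m/z values assumed to come from an in silico fragmenter.
candidates = {
    "candidate_A": [57.0704, 149.0233, 167.0339, 261.1485],
    "candidate_B": [57.0704, 121.0284, 279.1591],
}

scores = {name: explained_fraction(measured_fragments, frags)
          for name, frags in candidates.items()}
ranked = sorted(scores, key=scores.get, reverse=True)
print(scores)  # {'candidate_A': 0.75, 'candidate_B': 0.5}
print(ranked)  # ['candidate_A', 'candidate_B']
```

Practical scoring schemes may also weight matches by fragment intensity or penalise predicted fragments that are not observed. This sketch keeps only the simplest "fraction explained" term.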

Conclusions: the future

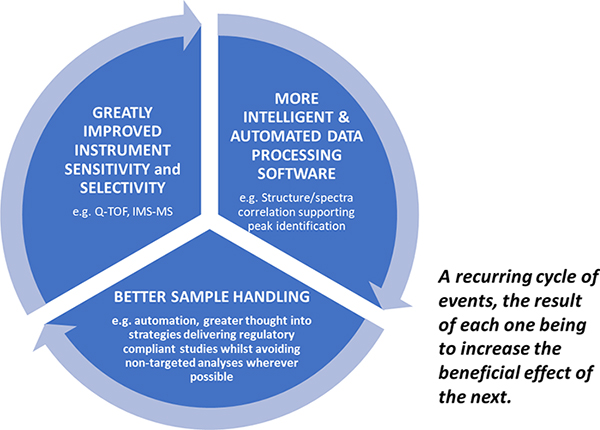

Regulators are clearly looking for evidence of materials that may harm human health, at ever lower levels and further back up the supply chain than ever before. Mastering ppq trace component analysis in complex matrices will depend on a virtuous circle (Figure 3). Innovative spectrometer development, intelligent software advances and cleaner, more focused sample handling must continue to support one another.

Figure 3. The Virtuous Circle driving ever lower reliable limits of detection in contaminant screening.

Postscript

One speaker pointed out an interesting recent development in the amendment to the EU regulations on food contact materials adopted in August 2019. While authorising a new substance, part 4 of the amendment also contained the following statement:⁴

The authorisation of the FCM substance No 1059 provided for in this Regulation, requires that the total migration of all oligomers with a molecular weight below 1000 Da does not exceed 5.0 mg/kg food or food simulant. As analytical methods to determine the migration of these oligomers are complex, a description of those methods is not necessarily available to competent authorities. Without that description, it is not possible for the competent authority to verify that the migration of oligomers from the material or article complies with the migration limit for these oligomers. Therefore, business operators placing on the market the final article or material containing that substance should be required to include in the supporting documentation referred to in Article 16 of Regulation (EU) No 10/2011 a description of the method and a calibration sample if required by the method.

This now appears to oblige all laboratories submitting oligomer migration studies to provide both their analytical method and calibration samples, since no analytical method can be validated without calibration samples. The authors do not currently know what procedures the EU has put in place for handling these samples. Nor do they know how confidential the analytical methods submitted with the data will be.
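Once each oligomer has been identified and quantified, checking the limit quoted above is simple arithmetic. As the regulation itself notes, the identification and quantification are the hard part. The minimal Python sketch below uses invented oligomer data. Only the 1000 Da cut-off and the 5.0 mg/kg limit come from the regulation text.

```python
# Limit quoted above for FCM substance No 1059 (Regulation (EU) 2019/1338):
# total migration of oligomers below 1000 Da must not exceed 5.0 mg/kg.
MW_CUTOFF_DA = 1000.0
LIMIT_MG_PER_KG = 5.0

def low_mw_oligomer_migration(results: list[tuple[float, float]]) -> float:
    """Sum migration (mg/kg food or simulant) of oligomers below the MW cut-off."""
    return sum(migration for mw, migration in results if mw < MW_CUTOFF_DA)

# Invented results: (molecular weight in Da, migration in mg/kg food simulant).
oligomers = [(384.4, 1.2), (576.6, 2.1), (768.8, 0.9), (1153.2, 1.5)]

total = low_mw_oligomer_migration(oligomers)
print(f"{total:.1f} mg/kg, compliant: {total <= LIMIT_MG_PER_KG}")
# 4.2 mg/kg, compliant: True  (the 1153.2 Da oligomer is excluded)
```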

References

1. 6th European Forum on Extractables, Leachables, Medical Devices and Food Contact Materials Testing, Hamburg, 25–26 September 2019.

2. F. Rossi and B. Young, "Down Down". Vertigo, released 29 November 1974.

3. E. van Beelen, C. Thomas and B. Peuser, "Overview of Waters solution for extractables and leachables studies", 6th European Forum on Extractables, Leachables, Medical Devices and Food Contact Materials Testing, Hamburg, 25–26 September 2019.

4. Commission Regulation (EU) 2019/1338 of 8 August 2019 amending Regulation (EU) No 10/2011 on plastic materials and articles intended to come into contact with food. http://data.europa.eu/eli/reg/2019/1338/oj