Articles and Columns

This column has invited two world-renowned experts in near infrared (NIR) spectroscopy to share decades of leading-edge experience, especially regarding sampling for quantitative NIR analysis.

This article looks at three related spectroscopic techniques in the toolbox, namely fluorescence, near infrared (NIR) and Raman, and discusses the “what”, “where” and “how” of their use in improving the quality of the associated measurement processes.

This column starts to answer the question, “how does one actually find FAIR data?” with a detailed example from Imperial College London.

Despite a multitude of chemical and physical methods capable of detecting fingerprint residues, fingerprint recovery remains substantially challenging. Spectroscopic methods have played a critical role in the analysis of fingerprints, where they have been used to identify the chemical constituents present, examine their degradation over time and compare the chemical variation between donors.

The latest in this series of “Four Generations of Quality” considers the essential component that controls our modern instrument systems and the associated concept of data integrity that is fundamental to the quality of the data being generated.

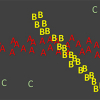

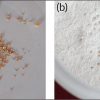

Sampling is nothing more than the practical application of statistics. If statistics were not available, one would have to sample every portion of an entire population to determine one or more parameters of interest. Many statistical tests could potentially be employed in sampling, but many of them are useful only if certain assumptions about the population are valid. Prior to any sampling event, the operative Decision Unit (DU) must be established. The Decision Unit is the material object about which an analytical result is used to draw inferences. In many cases, a population contains more than one Decision Unit. A lot is a collection (population) of individual Decision Units that will be treated as a whole (accepted or rejected), depending on the analytical results for individual Decision Units. The application of the Theory of Sampling (TOS) is critical for sampling the material within a Decision Unit. However, knowing the analyte concentration within one Decision Unit may provide no information about unsampled Decision Units, especially in a hyper-heterogeneous lot, where a Decision Unit can have completely different characteristics from an adjacent one. Where not every Decision Unit can be sampled, non-parametric statistics can be used to make inferences from the sampled Decision Units to those that are not sampled. Combining the TOS for sampling individual Decision Units with non-parametric statistics offers the best possible inference in situations where there are more Decision Units than can practically be sampled.
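As an illustration of the kind of distribution-free inference described above, the sketch below uses one standard non-parametric approach (a binomial, zero-or-few-failure acceptance argument); it is not necessarily the method used by the authors. It assumes that Decision Units are selected at random, that each sampled DU is classified simply as compliant or non-compliant, and that the lot is large enough for sampling without replacement to be ignored. The function names `confidence_all_compliant` and `required_sample_size` are hypothetical.

```python
import math


def confidence_all_compliant(n: int, p: float, failures: int = 0) -> float:
    """Confidence that at least a fraction p of the DUs in the lot are compliant,
    given `failures` non-compliant DUs among n randomly selected DUs.

    Assumption: binomial model (random selection, large lot). No assumption is
    made about the distribution of analyte concentrations within the lot.
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    q = 1.0 - p  # largest fraction of non-compliant DUs we are prepared to tolerate
    # Probability of observing <= `failures` non-compliant DUs if exactly a
    # fraction q of the lot were non-compliant (the binomial lower tail).
    tail = sum(math.comb(n, k) * q**k * p ** (n - k) for k in range(failures + 1))
    return 1.0 - tail


def required_sample_size(p: float, confidence: float) -> int:
    """Smallest n such that finding n compliant DUs out of n gives the stated
    confidence that at least a fraction p of the lot is compliant."""
    # With zero failures the confidence is 1 - p**n; solve p**n <= 1 - confidence.
    return math.ceil(math.log(1.0 - confidence) / math.log(p))


if __name__ == "__main__":
    n = required_sample_size(p=0.95, confidence=0.95)
    print(n)  # 59
    print(f"{confidence_all_compliant(n, 0.95):.4f}")
```

Under these assumptions, if all 59 randomly chosen DUs are compliant, one can be about 95 % confident that at least 95 % of the DUs in the lot are compliant, without sampling the remaining DUs. The inference rests entirely on random selection of DUs; the TOS is still needed to make each individual DU result representative.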

This article is about photoacoustic imaging and spectroscopy and their use for looking inside the human body, where they offer a number of benefits. Hilde Jans and Xavier Rottenberg explain the fundamentals and how new technology may be ushering in a new age of photoacoustics.

Tony Davies marks the passing of Svante Wold, who gave us “chemometrics”. It all started with a grant application!

Kim Esbensen, along with Dick Minnitt and Simon Dominy, tackles the ever-present dangers in sub-sampling, in this case in the assay laboratories of mining companies.

John Hammond continues his Four Generations of Quality series and starts to look at changes that will affect our activities into the future.

John Hammond continues his journey through four generations of quality, this time focusing on some of the specific Quality “tools” in use in both the ISO and GxP environments; how they are defined, applied and used; and how they have evolved with time.

Tony Davies has discovered there is a new UK National Data Strategy and that it is on the right lines: echoing many of his suggestions in this column over many years.

Getting your sampling right can hardly be more important than in the nuclear waste industry. This column describes how Belgian nuclear waste processing has benefited from the Theory of Sampling, and how this has yielded important insights that could lead to significant improvements in radioactive waste characterisation.

This sponsored article describes the RADIAN ASAP, a dedicated direct analysis system, which uses Atmospheric Pressure Solids Analysis Probe (ASAP) technology to analyze solids, liquids and solutions.

The authors have developed the “GlowFlow” mass spectrometry source, based on a flowing argon glow discharge, which can be retrofitted to existing instrumentation and ionises, at atmospheric pressure, compounds that are less amenable to electrospray ionisation (ESI).

A Special Section dedicated to examining the “Economic arguments for representative sampling”, with contributions from more than 20 experts in representative sampling.

COLID is a Finding Aid, essentially a “catalogue of catalogues” collating any data source to which it is connected. It collects and provides metadata about virtually any resource that you want to incorporate, links endpoints, such as spectra in a repository or details in a chemicals database, and links it all semantically with any other related resource.

Rodolfo J. Romañach

Department of Chemistry, University of Puerto Rico at Mayagüez

Editor’s summary

Sampling can be seen from many viewpoints: technical, economic, managerial… Here, sampling is described as a critical success factor in business cases, broadening the viewpoints presented above and below.

Trevor Bruceᵃ and Richard C.A. Minnittᵇ

ᵃ FLSmidth, Boksburg East, 1459, South Africa

ᵇ Visiting Emeritus Professor, University of the Witwatersrand, Johannesburg, South Africa

DOI: https://doi.org/10.1255/sew.2021.a45

© 2021 The Author

Published under a Creative Commons BY-NC-ND licence

Melissa C. Gouws

InnoVenton, Nelson Mandela University, Gqeberha (Port Elizabeth), South Africa