Articles and Columns

Researchers from Technische Universität Dresden reveal new insights into the behavior of protonated mono- and polyamines by determining pKa values using Fourier transform infrared titration, providing valuable data for chemical analysis.

Under the direction of Editor and Publisher Ian Michael, Spectroscopy Europe and Spectroscopy World have been writing about the latest trends in analytical sciences since 1975.

In this issue, you will also learn something of the history of Danish medieval stone churches in northern and western Jutland! “Danish geology icon Arne Noe-Nygaard picks up on an 800 years old sampling and invents the Replication Experiment: PAT in disguise” is firmly rooted in the Theory of Sampling, but it also offers some geology and history!

Tony considers the “Life and death of a data set: a forensic investigation”. Over time, spectral data become increasingly fragmented and lose important supporting information. Computer and software upgrades and processing in chemometric programs can all cause this. Of course, the answer is to follow the FAIR principles and ensure that they are implemented in the analytical laboratory.

This is the second part of an interview with John Hollerton; the first part is in the last issue! John recently retired from a long career at GSK and we took this opportunity to have a chat with him, as Mohan Cashyap and our beloved editor Ian Michael have both had the opportunity to work with John on projects and on the LISMS conference (Linking and Interpreting Spectra through Molecular Structures). Here, we move the discussion on to technologies and innovation: the great, the over-hyped and the effectively lost to the modern analytical laboratory.

The Assay Exchange paradigm is an integral element in many contractual agreements, stipulating how business transactions rely on the comparison of two independent assay results for commercial trading purposes. It turns out that the current Assay Exchange vs splitting gap comparison paradigm incurs no fewer than two unrecognised sampling uncertainties, which lead to hidden adverse economic consequences for at least one, and sometimes both, of the contractual parties. The magnitude of this unnecessary uncertainty is never estimated, which leaves management without information about potential economic losses: a breach of due diligence. However, all that is needed to resolve this critical issue is stringent adherence to the Theory of Sampling (TOS) through mandatory contractual stipulations that accept only representative sampling and sub-sampling principles.

Kim Esbensen and Claudia Paoletti hope that a risk assessment scope will provide the sampling community with an easier, and perhaps more powerful, way to reach out to business, commerce and trade, as well as to regulatory and law-enforcement authorities across many societal sectors. It may also speak a more business-oriented language beyond traditional “TOS technicalities”.

Tony and Mohan Cashyap interview John Hollerton, who has just retired after a career of over 40 years at GSK (and its many previous names). John has been responsible for many aspects of analytical chemistry at GSK. As Tony says, he is “an innovative ideas man with some interesting stories”.

This article describes how gamma-ray spectroscopy can reveal new features in buried archaeological sites, in the author’s case at the Roman settlement of Silchester in Hampshire, UK, and at other sites.

LC/MS/MS analysis of PFAS at ultra-trace levels requires mitigation measures for both the liquid chromatograph and the mass spectrometer to eliminate the leaching of fluorochemicals from components within the systems. Manual SPE configurations also require mitigative steps to remove any components constructed of PTFE, in order to minimise or eliminate PFAS contamination.

This article describes a really interesting use of spectroscopic data processing from optical fibre cables.

“Error” and “uncertainty” are being used interchangeably and confusingly. This is “a scientific flaw of the first order”! However, Kim and Francis will put you right.

John Hammond finishes his magnum opus on “Four Generations of Quality” with a look at what is science fiction and what is science fact. He considers what may turn out to be “fact” in the future for each of the preceding eight articles in the series.

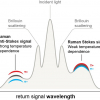

Non-linear spectroscopy has applications in many different fields owing to the accuracy and resolution it provides. This article describes a few possible applications that show its importance.

A Raman spectroscopy method was optimised to examine the chemical changes in aspirin tablets after exposure to helium temperatures.

Sampling and Vikings seem to be the next unexpected connection within Kim Esbensen’s Sampling Column. Kim has been exploring an area of Southern Norway where the founder of the Theory of Sampling, Pierre Gy, believed his ancestors originated. You will have to read the column to find the “smoking axe”! Oh, and there is an interesting report on the 10th World Conference on Sampling and Blending.

Tony Davies has started a timeline of significant spectroscopic system developments aligned with Queen Elizabeth’s reign as recently celebrated in her Platinum Jubilee. Jumping from Princess Anne the Princess Royal’s birth to Heinrich Kaiser certainly makes for a novel approach! Tony hopes that we can turn this into an online resource with your help.

John Hammond has taken a break from his Four Generations magnum opus and reports on the recent meeting of the ISO technical committee on reference materials (ISO TC 334).

This article describes MALDI imaging’s potential uses in pathology applications, and the benefits of the technique to map hundreds of biomolecules (proteins, lipids and glycans, for example) in a label-free, untargeted manner or for imaging target proteins using a modified immunohistochemistry protocol, often from a single tissue section.

This article describes the use of synchrotron X-ray fluorescence and absorption spectroscopies to image metals in the brain.